Build a Neural Network using ChemML

We use a sample dataset from the ChemML library that contains the SMILES codes and Dragon molecular descriptors for 500 small organic molecules, along with their densities in \(kg/m^3\).

[1]:

import warnings

warnings.filterwarnings("ignore")

import os

os.environ['TF_XLA_FLAGS'] = '--tf_xla_enable_xla_devices'

from chemml.datasets import load_organic_density

molecules, target, dragon_subset = load_organic_density()

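We split the data 3:1 into training and testing sets (test_size=0.25) and then standardize it with scikit-learn's StandardScaler. The scalers are fitted on the training data only. The test targets are left unscaled so that the metrics below are reported in \(kg/m^3\).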

[2]:

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

xscale = StandardScaler()

yscale = StandardScaler()

X_train, X_test, y_train, y_test = train_test_split(dragon_subset.values, target.values, test_size=0.25, random_state=42)

X_train = xscale.fit_transform(X_train)

X_test = xscale.transform(X_test)

y_train = yscale.fit_transform(y_train)
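The split-then-scale order keeps test-set statistics out of training, because the scalers only ever see the training rows. Below is a minimal sketch on random synthetic data (a stand-in for the real descriptors, with 500 rows but only 10 hypothetical feature columns) that checks the resulting split sizes and the effect of scaling.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in: 500 "molecules" x 10 features, plus densities as a 2D column
rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=2.0, size=(500, 10))
y = rng.normal(loc=1200.0, scale=100.0, size=(500, 1))

# test_size=0.25 gives a 3:1 training:testing split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

xscale = StandardScaler()
yscale = StandardScaler()
X_train_s = xscale.fit_transform(X_train)  # learn mean/std from training rows only
X_test_s = xscale.transform(X_test)        # reuse the training mean/std
y_train_s = yscale.fit_transform(y_train)

print(X_train.shape[0], X_test.shape[0])  # 375 125
```

The 375 training rows are what the training log below processes in mini-batches.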

Fit the chemml.models.MLP model to the training data

[3]:

from chemml.models import MLP

mlp = MLP(engine='tensorflow', nfeatures=X_train.shape[1], nneurons=[32, 64, 128],
          activations=['ReLU', 'ReLU', 'ReLU'], learning_rate=0.01, alpha=0.002,
          nepochs=100, batch_size=50, loss='mean_squared_error',
          is_regression=True, nclasses=None, layer_config_file=None, opt_config='sgd')

mlp.fit(X=X_train, y=y_train)


Epoch 1/100

8/8 [==============================] - 0s 786us/step - loss: 1.6591

Epoch 2/100

8/8 [==============================] - 0s 802us/step - loss: 0.6901

Epoch 3/100

8/8 [==============================] - 0s 719us/step - loss: 0.6365

Epoch 4/100

8/8 [==============================] - 0s 722us/step - loss: 0.4933

Epoch 5/100

8/8 [==============================] - 0s 1ms/step - loss: 0.4814

Epoch 6/100

8/8 [==============================] - 0s 739us/step - loss: 0.4217

Epoch 7/100

8/8 [==============================] - 0s 936us/step - loss: 0.4152

Epoch 8/100

8/8 [==============================] - 0s 750us/step - loss: 0.3982

Epoch 9/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3968

Epoch 10/100

8/8 [==============================] - 0s 1ms/step - loss: 0.4011

Epoch 11/100

8/8 [==============================] - 0s 825us/step - loss: 0.3865

Epoch 12/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3745

Epoch 13/100

8/8 [==============================] - 0s 739us/step - loss: 0.3663

Epoch 14/100

8/8 [==============================] - 0s 911us/step - loss: 0.3646

Epoch 15/100

8/8 [==============================] - 0s 766us/step - loss: 0.3650

Epoch 16/100

8/8 [==============================] - 0s 783us/step - loss: 0.3580

Epoch 17/100

8/8 [==============================] - 0s 746us/step - loss: 0.3539

Epoch 18/100

8/8 [==============================] - 0s 662us/step - loss: 0.3493

Epoch 19/100

8/8 [==============================] - 0s 909us/step - loss: 0.3472

Epoch 20/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3431

Epoch 21/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3417

Epoch 22/100

8/8 [==============================] - 0s 842us/step - loss: 0.3373

Epoch 23/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3365

Epoch 24/100

8/8 [==============================] - 0s 893us/step - loss: 0.3345

Epoch 25/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3318

Epoch 26/100

8/8 [==============================] - 0s 930us/step - loss: 0.3278

Epoch 27/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3273

Epoch 28/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3241

Epoch 29/100

8/8 [==============================] - 0s 971us/step - loss: 0.3216

Epoch 30/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3223

Epoch 31/100

8/8 [==============================] - 0s 957us/step - loss: 0.3184

Epoch 32/100

8/8 [==============================] - 0s 2ms/step - loss: 0.3158

Epoch 33/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3132

Epoch 34/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3102

Epoch 35/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3088

Epoch 36/100

8/8 [==============================] - 0s 965us/step - loss: 0.3065

Epoch 37/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3052

Epoch 38/100

8/8 [==============================] - 0s 1ms/step - loss: 0.3038

Epoch 39/100

8/8 [==============================] - 0s 866us/step - loss: 0.3013

Epoch 40/100

8/8 [==============================] - 0s 2ms/step - loss: 0.2985

Epoch 41/100

8/8 [==============================] - 0s 4ms/step - loss: 0.2968

Epoch 42/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2963

Epoch 43/100

8/8 [==============================] - 0s 2ms/step - loss: 0.2930

Epoch 44/100

8/8 [==============================] - 0s 931us/step - loss: 0.2910

Epoch 45/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2885

Epoch 46/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2876

Epoch 47/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2868

Epoch 48/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2839

Epoch 49/100

8/8 [==============================] - 0s 766us/step - loss: 0.2827

Epoch 50/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2819

Epoch 51/100

8/8 [==============================] - 0s 766us/step - loss: 0.2781

Epoch 52/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2784

Epoch 53/100

8/8 [==============================] - 0s 749us/step - loss: 0.2764

Epoch 54/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2746

Epoch 55/100

8/8 [==============================] - 0s 940us/step - loss: 0.2721

Epoch 56/100

8/8 [==============================] - 0s 894us/step - loss: 0.2716

Epoch 57/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2691

Epoch 58/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2665

Epoch 59/100

8/8 [==============================] - 0s 2ms/step - loss: 0.2652

Epoch 60/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2636

Epoch 61/100

8/8 [==============================] - 0s 800us/step - loss: 0.2623

Epoch 62/100

8/8 [==============================] - 0s 987us/step - loss: 0.2605

Epoch 63/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2584

Epoch 64/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2571

Epoch 65/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2564

Epoch 66/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2540

Epoch 67/100

8/8 [==============================] - 0s 759us/step - loss: 0.2531

Epoch 68/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2522

Epoch 69/100

8/8 [==============================] - 0s 765us/step - loss: 0.2490

Epoch 70/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2476

Epoch 71/100

8/8 [==============================] - 0s 743us/step - loss: 0.2460

Epoch 72/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2447

Epoch 73/100

8/8 [==============================] - 0s 722us/step - loss: 0.2426

Epoch 74/100

8/8 [==============================] - 0s 943us/step - loss: 0.2416

Epoch 75/100

8/8 [==============================] - 0s 750us/step - loss: 0.2406

Epoch 76/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2387

Epoch 77/100

8/8 [==============================] - 0s 683us/step - loss: 0.2368

Epoch 78/100

8/8 [==============================] - 0s 870us/step - loss: 0.2364

Epoch 79/100

8/8 [==============================] - 0s 731us/step - loss: 0.2343

Epoch 80/100

8/8 [==============================] - 0s 925us/step - loss: 0.2326

Epoch 81/100

8/8 [==============================] - 0s 3ms/step - loss: 0.2307

Epoch 82/100

8/8 [==============================] - 0s 2ms/step - loss: 0.2302

Epoch 83/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2283

Epoch 84/100

8/8 [==============================] - 0s 861us/step - loss: 0.2277

Epoch 85/100

8/8 [==============================] - 0s 903us/step - loss: 0.2268

Epoch 86/100

8/8 [==============================] - 0s 747us/step - loss: 0.2249

Epoch 87/100

8/8 [==============================] - 0s 860us/step - loss: 0.2236

Epoch 88/100

8/8 [==============================] - 0s 902us/step - loss: 0.2222

Epoch 89/100

8/8 [==============================] - 0s 810us/step - loss: 0.2204

Epoch 90/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2188

Epoch 91/100

8/8 [==============================] - 0s 723us/step - loss: 0.2177

Epoch 92/100

8/8 [==============================] - 0s 658us/step - loss: 0.2162

Epoch 93/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2154

Epoch 94/100

8/8 [==============================] - 0s 751us/step - loss: 0.2141

Epoch 95/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2123

Epoch 96/100

8/8 [==============================] - 0s 722us/step - loss: 0.2116

Epoch 97/100

8/8 [==============================] - 0s 1ms/step - loss: 0.2100

Epoch 98/100

8/8 [==============================] - 0s 699us/step - loss: 0.2089

Epoch 99/100

8/8 [==============================] - 0s 888us/step - loss: 0.2070

Epoch 100/100

8/8 [==============================] - 0s 692us/step - loss: 0.2061
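Each epoch in the log above runs 8 steps because the 375 training samples are processed in mini-batches of batch_size=50, and ceil(375 / 50) = 8, with the last batch holding only 25 samples. The NumPy sketch below illustrates what one such pass computes for a network with the same hidden-layer sizes ([32, 64, 128], ReLU), assuming a single linear output unit for regression. It uses random, untrained weights and a 200-feature input (the width implied by the 6,432 parameters of the first Dense layer in the summary at the end); it is an illustration, not ChemML's or Keras's implementation.

```python
import math

import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features, batch_size = 375, 200, 50
layer_sizes = [n_features, 32, 64, 128, 1]  # input, three ReLU hidden layers, one output

# Random (untrained) weights and zero biases for each fully connected layer
weights = [rng.normal(scale=0.1, size=(n_in, n_out))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

def forward(x):
    """Forward pass: ReLU after each hidden layer, identity on the output layer."""
    for i, (W, b) in enumerate(zip(weights, biases)):
        x = x @ W + b
        if i < len(weights) - 1:
            x = np.maximum(x, 0.0)  # ReLU
    return x

X = rng.normal(size=(n_samples, n_features))
n_steps = math.ceil(n_samples / batch_size)  # mini-batches per epoch
outputs = [forward(X[i * batch_size:(i + 1) * batch_size]) for i in range(n_steps)]
print(n_steps, [o.shape[0] for o in outputs])  # 8 [50, 50, 50, 50, 50, 50, 50, 25]
```

During training, each of these 8 steps would also compute the mean squared error on its batch and update the weights with SGD; the sketch stops at the forward pass.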

Saving the ChemML model

[4]:

mlp.save(path=".", filename="saved_MLP")

File saved as ./saved_MLP_chemml_model.json

Loading the saved ChemML model

[5]:

from chemml.utils import load_chemml_model

loaded_MLP = load_chemml_model("./saved_MLP_chemml_model.json")

print(loaded_MLP)

<chemml.models.mlp.MLP object at 0x7fa14c8ef6a0>

[6]:

loaded_MLP.model

[6]:

<tensorflow.python.keras.engine.sequential.Sequential at 0x7fa1489d1dc0>

Predict the densities for the test data

[7]:

y_pred = loaded_MLP.predict(X_test)

# Undo the target scaling so that predictions are in kg/m^3, like y_test
y_pred = yscale.inverse_transform(y_pred.reshape(-1, 1))
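Because the network was trained on standardized targets, its raw predictions are in standardized units. inverse_transform maps them back by computing y = z * scale_ + mean_ with the statistics learned from y_train, and the reshape(-1, 1) is there because scikit-learn scalers expect 2D input. A small check of that formula on made-up density values (the standardized outputs are hypothetical and 1D here for illustration):

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up training densities in kg/m^3, as a 2D column
y_train = np.array([[980.0], [1120.0], [1250.0], [1410.0]])
yscale = StandardScaler().fit(y_train)

# Hypothetical standardized model outputs, as a flat vector
z_pred = np.array([-1.0, 0.0, 0.5])

# inverse_transform needs a 2D array, hence reshape(-1, 1)
y_pred = yscale.inverse_transform(z_pred.reshape(-1, 1))

# Same result by hand: y = z * std + mean
manual = z_pred.reshape(-1, 1) * yscale.scale_ + yscale.mean_
print(np.allclose(y_pred, manual))  # True
```

A standardized prediction of 0 maps to the training mean, 1190 kg/m^3 for these made-up values.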

Evaluate model performance using chemml.utils.regression_metrics

[8]:

from chemml.utils import regression_metrics

# regression_metrics requires y_test and y_pred to have the same data type

metrics_df = regression_metrics(y_test, y_pred)

print("Metrics: \n")

print(metrics_df[["MAE", "RMSE", "MAPE", "r_squared"]])

Metrics:

         MAE       RMSE      MAPE  r_squared
0  10.894117  13.492043  0.857629    0.98108

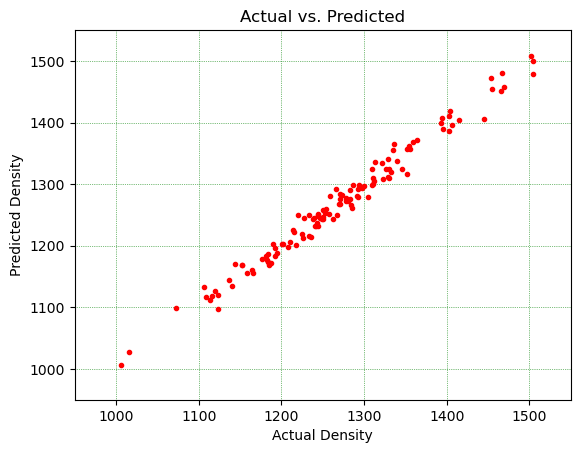
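For reference, the four metrics selected above follow the standard definitions, sketched below with NumPy on a few made-up values and cross-checked against scikit-learn. MAE and RMSE are in the target's units (kg/m^3 here). This sketch reports MAPE as a percentage; that ChemML does the same is an assumption, though it is consistent with the table above, where an MAE of about 11 kg/m^3 on densities between roughly 950 and 1550 kg/m^3 corresponds to an error of about 1%.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Made-up actual and predicted densities (kg/m^3)
y_true = np.array([1000.0, 1100.0, 1250.0, 1400.0])
y_hat = np.array([1010.0, 1090.0, 1240.0, 1420.0])

err = y_true - y_hat
mae = np.mean(np.abs(err))                       # mean absolute error
rmse = np.sqrt(np.mean(err ** 2))                # root mean squared error
mape = 100.0 * np.mean(np.abs(err / y_true))     # mean absolute percentage error, in percent
r_squared = 1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2)

print(f"MAE={mae:.2f} RMSE={rmse:.2f} MAPE={mape:.2f}% R2={r_squared:.4f}")
```

RMSE is always at least as large as MAE; a gap between them, like 13.5 vs. 10.9 in the table, indicates some larger individual errors.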

Plot actual vs. predicted values

[9]:

from chemml.visualization import scatter2D, SavePlot, decorator

import pandas as pd

%matplotlib inline

df = pd.DataFrame()

df["Actual"] = y_test.reshape(-1,)

df["Predicted"] = y_pred.reshape(-1,)

sc = scatter2D('r', marker='.')
fig = sc.plot(dfx=df, dfy=df, x="Actual", y="Predicted")

dec = decorator(title='Actual vs. Predicted', xlabel='Actual Density', ylabel='Predicted Density',
                xlim=(950, 1550), ylim=(950, 1550), grid=True,
                grid_color='g', grid_linestyle=':', grid_linewidth=0.5)
fig = dec.fit(fig)

sa = SavePlot(filename='Parity', output_directory='images',
              kwargs={'facecolor': 'w', 'dpi': 330, 'pad_inches': 0.1, 'bbox_inches': 'tight'})
sa.save(obj=fig)

fig.show()

The Plot has been saved at: ./images/Parity.png
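If ChemML's visualization helpers are not available, a similar parity plot can be drawn with plain Matplotlib. The sketch below is a rough equivalent, not what scatter2D, decorator, and SavePlot do internally: it uses made-up values, adds a dashed y = x reference line (points on it are perfect predictions), and writes the figure to a temporary directory using the non-interactive Agg backend.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is needed
import matplotlib.pyplot as plt
import numpy as np

# Made-up actual and predicted densities (kg/m^3)
actual = np.array([1000.0, 1100.0, 1250.0, 1400.0])
predicted = np.array([1010.0, 1090.0, 1240.0, 1420.0])

fig, ax = plt.subplots(figsize=(5, 5))
ax.scatter(actual, predicted, c="r", marker=".")
lims = (950, 1550)
ax.plot(lims, lims, "k--", linewidth=0.8)  # y = x: perfect-prediction line
ax.set(xlim=lims, ylim=lims, title="Actual vs. Predicted",
       xlabel="Actual Density (kg/m^3)", ylabel="Predicted Density (kg/m^3)")
ax.grid(True, color="g", linestyle=":", linewidth=0.5)

# Save with the same options passed to SavePlot above
out_path = os.path.join(tempfile.mkdtemp(), "Parity.png")
fig.savefig(out_path, facecolor="w", dpi=330, bbox_inches="tight", pad_inches=0.1)
plt.close(fig)
```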

If the underlying (TensorFlow/PyTorch) model is required, retrieve it with get_model():

[10]:

base_mlp = mlp.get_model()

[11]:

print(type(base_mlp))

<class 'tensorflow.python.keras.engine.sequential.Sequential'>

Sometimes you may need the Keras model without its output layer (e.g., for transfer learning):

[12]:

keras_mlp_no_output = mlp.get_model(include_output=False)

print(type(keras_mlp_no_output))

keras_mlp_no_output.summary()  # summary() prints the table itself; wrapping it in print() would also print its return value, None

<class 'tensorflow.python.keras.engine.sequential.Sequential'>

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                (None, 32)                6432
_________________________________________________________________
dense_1 (Dense)              (None, 64)                2112
_________________________________________________________________
dense_2 (Dense)              (None, 128)               8320
=================================================================
Total params: 16,864
Trainable params: 16,864
Non-trainable params: 0
_________________________________________________________________
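The parameter counts in the summary follow from the Dense-layer formula: a layer with n_in inputs and n_out units has n_in * n_out weights plus n_out biases. Working backwards from the first layer's 6,432 parameters gives 200 input features ((6432 - 32) / 32 = 200). The short check below reproduces the table from that inferred input width and the nneurons=[32, 64, 128] setting.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out

n_features = 200  # inferred from the summary above, not read from the dataset
nneurons = [32, 64, 128]

sizes = [n_features] + nneurons
per_layer = [dense_params(a, b) for a, b in zip(sizes[:-1], sizes[1:])]
print(per_layer, sum(per_layer))  # [6432, 2112, 8320] 16864
```

Assuming the regression output layer is a single unit with a bias, it would add dense_params(128, 1) = 129 parameters to the full model returned by get_model().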